BREAKING: DeepSeek’s Controversial LLM Outsmarts GPT-4.5!

Breaking News: DeepSeek Emerges as the Leading Non-Thinking LLM

In a widely shared post on March 25, 2025, Deedy (@deedydas) declared that DeepSeek now has the #1 non-thinking LLM available today, that is, the best model that answers directly rather than working through an extended reasoning phase first. If the claims hold up, the release offers a compelling alternative to existing models like GPT-4.5 and could reshape how teams choose language models.

Key Features of DeepSeek

DeepSeek brings a plethora of advantages that make it a formidable competitor in the LLM space. Here are some of its standout features:

Superior Performance

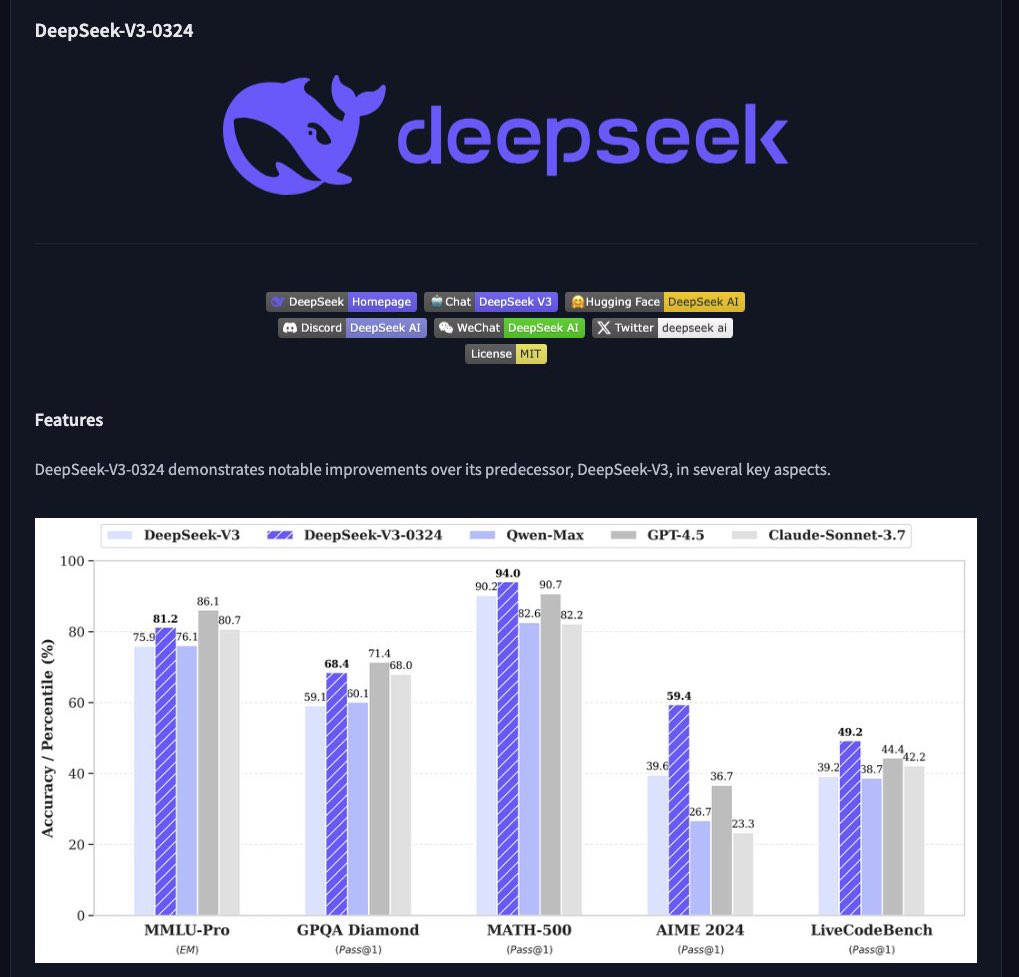

DeepSeek is reported to match or outperform top-tier models such as GPT-4.5. If those results hold across real workloads, it becomes a leading choice for businesses and developers seeking effective AI solutions.

Cost Efficiency

One of the most significant advantages of DeepSeek is its cost. It is priced at approximately $0.27 per million input tokens and $1.10 per million output tokens, against $75 and $150 respectively for GPT-4.5. The source post rounds this to 100 to 200 times cheaper; taken at face value, the quoted prices work out to roughly 278 times cheaper on input and 136 times cheaper on output. This affordability opens the door for wider adoption and experimentation across various industries, enabling more organizations to leverage advanced AI technology.
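The price gap is easy to check by hand. The short sketch below simply divides the quoted per-million-token prices; the dictionary and function names are illustrative, and the prices are the ones cited in this article rather than values pulled from any live price list.

```python
# Prices in USD per 1M tokens, as quoted in this article (not fetched live).
DEEPSEEK = {"input": 0.27, "output": 1.10}
GPT_4_5 = {"input": 75.00, "output": 150.00}


def price_ratios(cheap, expensive):
    """Return how many times more `expensive` costs than `cheap`, per token type."""
    return {kind: expensive[kind] / cheap[kind] for kind in cheap}


ratios = price_ratios(DEEPSEEK, GPT_4_5)
for kind, ratio in ratios.items():
    print(f"{kind} tokens: about {ratio:.0f}x cheaper")
```

Run as written, this reports about 278x for input tokens and about 136x for output tokens, so the "100 to 200 times" headline figure is a rounding of two quite different ratios.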


Enhanced Speed

DeepSeek boasts impressive processing speed, operating at around 60 tokens per second. In contrast, competitors like GPT-4.5 operate at roughly 12 tokens per second. This fivefold increase in speed means that applications powered by DeepSeek can process language tasks more efficiently, significantly improving user experience and operational productivity.
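To see what the throughput gap means for a single response, here is a minimal sketch that converts tokens per second into waiting time. The 600-token response length is a hypothetical example, and the calculation ignores network latency and time to first token, so treat it as a rough estimate only.

```python
def generation_seconds(num_tokens, tokens_per_second):
    """Time to stream `num_tokens` at a steady rate, ignoring startup latency."""
    return num_tokens / tokens_per_second


RESPONSE_TOKENS = 600  # hypothetical response length, chosen for illustration

deepseek_seconds = generation_seconds(RESPONSE_TOKENS, 60)  # 60 tok/s, as quoted
gpt45_seconds = generation_seconds(RESPONSE_TOKENS, 12)     # ~12 tok/s, as quoted

print(f"DeepSeek: {deepseek_seconds:.0f} s")
print(f"GPT-4.5:  {gpt45_seconds:.0f} s")
print(f"Speedup:  {gpt45_seconds / deepseek_seconds:.0f}x")
```

For a response of this length the difference is 10 seconds versus 50 seconds, which is where a fivefold speedup becomes noticeable to an end user.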

Compact Size

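Despite its powerful capabilities, DeepSeek is smaller in scale, with 685 billion parameters in a Mixture of Experts (MoE) architecture. Other frontier models are rumored to reach or exceed 2 trillion parameters, although that figure is speculation (the source post itself writes it as "2T??"). In an MoE model only a subset of the experts is active for each token, which reduces the compute needed per token, but the full set of weights must still be stored, so deployment remains a server-class undertaking. Even so, the smaller footprint makes the model more practical for developers and researchers to host than a multi-trillion-parameter one.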
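For a sense of scale, the sketch below estimates how much memory the weights alone would occupy at a few common numeric precisions. The bytes-per-parameter values are standard for those formats, but the calculation is an assumption-laden lower bound: it ignores activations, the KV cache, and any runtime overhead, and it says nothing about which formats the model is actually distributed in.

```python
# Storage cost of one parameter at common precisions (bytes).
BYTES_PER_PARAM = {"16-bit": 2, "8-bit": 1, "4-bit": 0.5}


def weight_gigabytes(num_params, bytes_per_param):
    """Approximate size of the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


NUM_PARAMS = 685e9  # 685 billion parameters, as quoted above

for precision, nbytes in BYTES_PER_PARAM.items():
    print(f"{precision}: ~{weight_gigabytes(NUM_PARAMS, nbytes):,.1f} GB")
```

Even at 4-bit precision the weights come to several hundred gigabytes, so "smaller" here is relative to other frontier-scale models rather than to typical desktop hardware.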

Free and Open Source

DeepSeek is released under the MIT license, allowing for free distribution and modification. This open-source approach encourages community collaboration, innovation, and transparency in the development of AI technologies. Developers can easily access the model, adapt it to their needs, and contribute to its ongoing improvement.

Implications for the AI Landscape

The emergence of DeepSeek signals a significant shift in the AI landscape. With its competitive pricing, superior performance, and open-source availability, it is poised to democratize access to advanced language processing capabilities. Organizations that previously hesitated to adopt AI solutions due to high costs may now find DeepSeek to be an accessible entry point.

Opportunities for Developers and Businesses

For developers, the open-source nature of DeepSeek presents an invaluable opportunity to experiment with and customize the model for specific applications. Businesses can leverage its efficiency and cost-effectiveness to enhance customer interactions, automate content generation, and streamline internal processes. The model’s faster processing speed means that businesses can expect quicker turnaround times for language-based tasks, leading to improved operational efficiency.

Potential Use Cases

DeepSeek can be employed across various sectors, including:

- Customer Support: Automating responses to customer inquiries while maintaining high-quality communication.

- Content Creation: Generating articles, blogs, and marketing materials with ease.

- Data Analysis: Summarizing large volumes of information quickly, aiding in decision-making processes.

- Language Translation: Providing accurate and context-sensitive translations in real time.

Conclusion

DeepSeek’s introduction as the leading non-thinking LLM marks a pivotal moment for the AI and tech community. With its blend of affordability, efficiency, and superior performance, it stands out as a game-changer for organizations looking to harness the power of artificial intelligence. Its open-source model also fosters a collaborative environment for developers, promoting innovation and advancements in AI technology.

As the landscape of language models continues to evolve, DeepSeek sets a new standard for what is possible, inspiring further developments and encouraging broader adoption of AI solutions across industries. Organizations and developers are encouraged to explore DeepSeek’s capabilities and consider integrating this powerful tool into their workflows to unlock new potential in their operations.

For more detailed updates on DeepSeek and its applications, stay tuned as this story continues to develop in the ever-evolving world of AI.

BREAKING DeepSeek has #1 best non-thinking LLM.

- Better (beats or ties GPT4.5, etc)

- Cheaper, by 100-200x ($0.27/1.10 vs $75/$150 for 1M input/output toks)

- Faster, by 5x (60 tok/s vs ~12 tok/s)

- Smaller (685B MoE vs 2T??)

- Free to distribute (MIT license)

- Open source

pic.twitter.com/PVc9nof0o0

Deedy (@deedydas), March 25, 2025

BREAKING: DeepSeek has #1 Best Non-Thinking LLM

Big news in the world of AI! DeepSeek has just been crowned the champion of non-thinking LLMs (Large Language Models). This isn’t just some casual announcement; it’s a game-changer for developers, businesses, and tech enthusiasts alike. If you’re curious about what makes DeepSeek stand out, grab a seat because we’re diving deep into the details!

Better Performance: Beats or Ties GPT-4.5, etc.

First off, let’s talk about performance. Many of us are familiar with GPT-4.5, which has been a heavy hitter in the AI landscape. Well, DeepSeek doesn’t just hold its ground; by the reported results, it beats or ties GPT-4.5. This is crucial, especially for those developing applications that rely on nuanced understanding and responsiveness. If those results carry over to your workload, you can expect reliable outputs with less fine-tuning effort. With DeepSeek in your toolkit, crafting user-friendly AI solutions just got a whole lot easier.

Cost-Effective: Cheaper by 100-200x

Now, let’s hit on one of the most significant aspects: cost. Who wouldn’t want to save a ton of money while accessing top-notch technology? DeepSeek is priced at an astonishingly low $0.27 per 1 million input tokens and $1.10 per 1 million output tokens. In comparison, GPT-4.5 runs $75 and $150 for the same amounts. The source post calls that 100-200x cheaper; the quoted prices actually work out to about 278x on input and 136x on output. This cost efficiency opens the door for startups and smaller businesses to leverage advanced AI without breaking the bank. Imagine the possibilities when more players can enter the field!
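What does that look like on an actual bill? Here is a rough sketch that prices a made-up monthly workload at both sets of rates. The workload size (50 million input tokens, 10 million output tokens) is purely hypothetical, the function name is illustrative, and the prices are the per-million figures quoted in this article.

```python
# USD per 1M tokens, as quoted in this article.
DEEPSEEK_PRICES = {"input": 0.27, "output": 1.10}
GPT_4_5_PRICES = {"input": 75.00, "output": 150.00}


def monthly_cost(input_tokens, output_tokens, prices):
    """Total USD cost for a workload, given per-million-token prices."""
    return (input_tokens / 1e6) * prices["input"] + (output_tokens / 1e6) * prices["output"]


# Hypothetical workload, chosen only for illustration.
INPUT_TOKENS = 50_000_000
OUTPUT_TOKENS = 10_000_000

deepseek_cost = monthly_cost(INPUT_TOKENS, OUTPUT_TOKENS, DEEPSEEK_PRICES)
gpt45_cost = monthly_cost(INPUT_TOKENS, OUTPUT_TOKENS, GPT_4_5_PRICES)

print(f"DeepSeek: ${deepseek_cost:,.2f}")
print(f"GPT-4.5:  ${gpt45_cost:,.2f}")
```

Under these assumptions the same month of traffic costs $24.50 on DeepSeek and $5,250.00 on GPT-4.5. The exact multiple depends on your mix of input and output tokens, since the two are discounted by different amounts.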

Speed: Faster by 5x

Speed is another critical factor that can make or break an AI application. DeepSeek is reported to generate around 60 tokens per second, roughly five times faster than GPT-4.5, which manages about 12 tokens per second. What does this mean for you? If you’re developing applications for real-time interactions, be it chatbots, customer service automation, or even creative writing tools, this speed can lead to smoother, more responsive applications. Shorter waits for the AI to catch up help keep your users engaged and satisfied.

Size Matters: Smaller Yet Powerful

When it comes to AI, size often dictates how hard a model is to run. DeepSeek is designed with efficiency in mind. With a Mixture of Experts (MoE) architecture totaling 685 billion parameters, it’s significantly smaller than some of its gigantic counterparts, which are rumored to reach 2 trillion parameters. That smaller size lowers the hardware bar compared with those models, making it more accessible. Keep expectations realistic, though: a model of this size still needs hundreds of gigabytes of memory for its weights alone, so running it yourself means cloud servers or a well-equipped multi-GPU machine rather than an ordinary desktop.

Free to Distribute: MIT License

One of the most exciting things about DeepSeek is its licensing model. It’s open-source and free to distribute under the MIT License. This is a monumental win for developers who want flexibility and freedom in how they use and share their AI technology. Open-source models encourage collaboration, innovation, and community development. So, whether you want to build your application from scratch or contribute to improving DeepSeek, the doors are wide open!

Open Source: A Community-Driven Initiative

DeepSeek’s open-source nature means that anyone can contribute to its development. This is crucial in the tech world, where rapid advancements are the norm. Developers, researchers, and enthusiasts can tweak the model, test new ideas, and share their findings with the community. This collaborative approach not only enhances the model but also accelerates the pace of innovation. Imagine being part of a community that is continuously pushing the boundaries of what AI can do!

Why This Matters to You

So, why should you care about DeepSeek? If you’re a developer, entrepreneur, or even just an AI enthusiast, this development opens up a world of possibilities. The combination of better performance, lower costs, faster speeds, and accessibility means that you can create cutting-edge applications without the usual hurdles. It democratizes access to advanced AI technology, allowing more people to innovate and contribute to the field.

Applications of DeepSeek

The potential applications for DeepSeek are vast. From chatbots that can engage users in more meaningful conversations to tools that assist in content creation, the possibilities are endless. Businesses can utilize DeepSeek for customer service, data analysis, and even in creative sectors like writing and art. You can build applications that are not only efficient but also highly responsive and capable of understanding context better than ever before.

Community and Support

As with any open-source initiative, community support plays a vital role. Engaging with fellow developers and users can provide insights and foster collaboration. Online forums, GitHub repositories, and social media groups are excellent places to connect with others who are exploring DeepSeek. Sharing your experiences, challenges, and successes can lead to new ideas and improvements that benefit everyone.

Future Prospects of DeepSeek

Looking ahead, the future of DeepSeek appears bright. With ongoing developments and community involvement, we can expect continuous improvements and updates that keep it competitive in the ever-evolving landscape of AI. The potential for integration with other technologies, like machine learning frameworks and data analytics tools, could further enhance its capabilities. Staying updated with the latest advancements will be key for anyone looking to leverage this powerful tool.

Final Thoughts

DeepSeek is not just another LLM; it represents a shift in how we think about AI accessibility and functionality. With its impressive performance metrics, cost-effectiveness, and community-driven approach, it’s set to become a staple in the AI toolkit of developers everywhere. Whether you’re looking to innovate in your field or simply explore the capabilities of AI, DeepSeek is an option that should definitely be on your radar. Embrace this new era of AI and let DeepSeek help you unlock the full potential of your projects.
