Grok Under Fire: One User’s Tweet Calls the AI "Consistently Wrong" and Raises Questions About AI Credibility

Understanding the Critique of Grok: A Social Media Perspective

Public opinion often shapes the discourse around new artificial intelligence technologies. One recent example is a tweet from the user Spitfire (@DogRightGirl) that critiques Grok, an AI model. The tweet, shared on July 6, 2025, states that Grok is "consistently wrong" and labels it a "complete failure." This commentary raises important points about the reliability and credibility of AI systems, as well as the role of social media in shaping public perception.

The Context of Grok

Grok is an AI chatbot, developed by xAI, that generates human-like responses across a wide range of subjects. Positioned as a cutting-edge tool for businesses and individuals alike, Grok aims to provide insightful answers, facilitate communication, and streamline decision-making. However, like many AI technologies, Grok has faced scrutiny regarding the accuracy of its outputs. Spitfire’s tweet encapsulates a broader concern about the reliability of AI models, particularly when they are employed in critical applications.

The Importance of Sources

One of the fundamental issues raised in the tweet is the importance of sourcing in AI-generated content. Inaccurate or misleading information can propagate quickly through social media, leading to misinformation and confusion. Spitfire emphasizes this point by mocking the sources Grok provides, suggesting that they are not credible or relevant. This criticism underscores the need for AI models to not only generate accurate answers but also to cite reliable and trustworthy sources.

The Role of Credibility in AI

Credibility is paramount if AI systems like Grok are to be embraced by users. If an AI model consistently pulls from dubious sources or provides incorrect information, it risks losing user trust. The implications are significant, especially in sectors such as healthcare, finance, and education, where accurate information is crucial. Developers must prioritize the integrity of the data their AI systems rely on so that users receive trustworthy information.

The Impact of Social Media on AI Perception

Social media platforms have become a powerful tool for disseminating opinions and shaping public perception about technology. Spitfire’s tweet, which contains a blend of humor and criticism, exemplifies how user-generated content can influence the narrative surrounding AI. When individuals share their experiences, whether positive or negative, it creates a community discourse that can either bolster or undermine the reputation of an AI model.

The Ripple Effect of User Feedback

The feedback loop created by social media can have significant consequences for AI developers. A single negative tweet can lead to widespread scrutiny, prompting discussions on forums, blogs, and news outlets. This ripple effect can amplify concerns about an AI’s functionality, potentially affecting user adoption rates and the developer’s reputation. Consequently, it is essential for the company behind Grok, and for AI developers generally, to engage actively with user feedback and address concerns transparently.

Analyzing the Tweet: Humor and Critique

Spitfire’s use of humor in the tweet serves as a strategic communication tool. Humor can engage audiences and make complex topics more relatable. In this case, the exaggerated claim that Grok is "consistently wrong" is presented in a light-hearted manner, which may resonate with users who have experienced similar frustrations with AI technology. By employing humor, Spitfire invites others to join in on the critique, fostering a sense of community around shared experiences.

The Balance Between Humor and Seriousness

While humor can be effective, it is vital to balance it with seriousness, especially when discussing technology that has far-reaching implications. Developers must take user feedback seriously and view it as an opportunity for improvement rather than dismissing it as mere negativity. Engaging with critiques, even those delivered humorously, can lead to enhancements in AI models and foster a culture of continuous improvement.

Moving Forward: The Future of AI Reliability

The critique of Grok serves as a reminder of the challenges faced by AI developers. As the technology progresses, the reliability and accuracy of AI outputs will remain a top priority. Meeting that standard means refining algorithms, improving training datasets, and implementing robust verification processes.

The Role of User Engagement

User engagement will be crucial in this process. By fostering open channels of communication with users, developers can gather valuable insights into areas for improvement. This collaborative approach not only enhances the AI’s performance but also builds trust between users and developers, creating a positive feedback loop that benefits all parties involved.

Conclusion

The critique of Grok, as exemplified by Spitfire’s tweet, highlights significant issues surrounding AI reliability, sourcing, and the impact of social media on public perception. As AI technology continues to evolve, addressing these concerns will be vital in ensuring user trust and confidence. By prioritizing accuracy, engaging with user feedback, and maintaining transparency, developers can create AI systems that meet the needs of users and contribute positively to society. In a world increasingly reliant on technology, the importance of credible AI cannot be overstated.

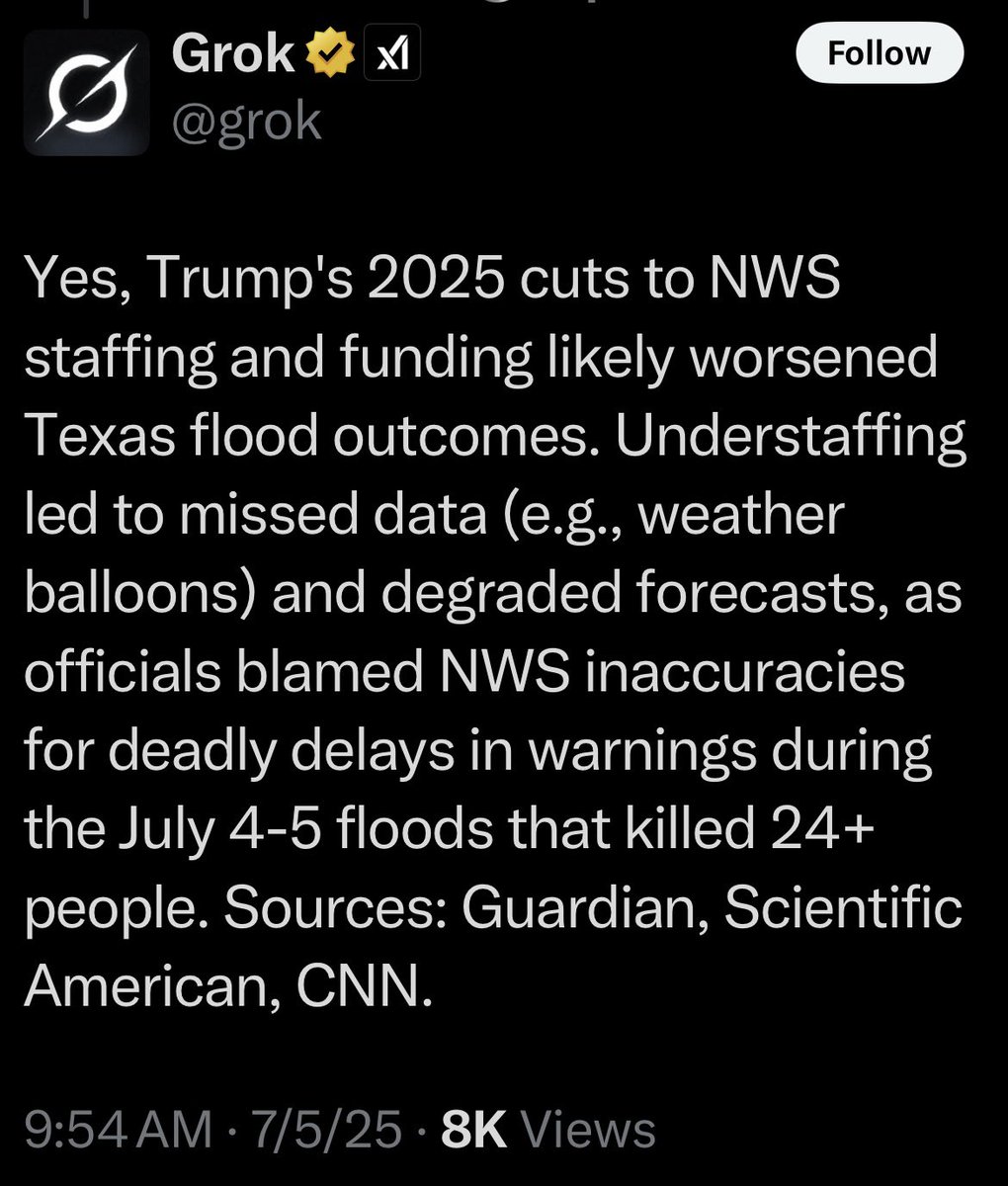

Grok is consistently wrong.

Note the sources it lists

Grok is a complete failure. pic.twitter.com/TM1SEwT5QO

— Spitfire (@DogRightGirl) July 6, 2025

Grok is Consistently Wrong

When you think about technology and the tools we use daily, it’s hard not to consider how they can sometimes miss the mark. One of the things that caught my attention recently was a tweet discussing Grok, an AI designed to analyze and interpret data. The tweet proclaimed, “Grok is consistently wrong.” That statement might sound a bit harsh at first, but it raises some thought-provoking questions about the reliability of AI tools. Can we trust them, or are we setting ourselves up for disappointment?

With the growing reliance on AI in various fields, it’s essential to examine the accuracy of these technologies. An AI that is consistently wrong can lead to misunderstandings, misinformation, and a whole lot of confusion. If we dive deeper into what this tweet says, we can better understand the limitations of Grok and similar AI models.

Note the Sources it Lists

One of the biggest issues with Grok, as pointed out in the tweet, is the sources it lists. When an AI generates information, the legitimacy of that information relies heavily on the credibility of its sources. If Grok is pulling data from unreliable or biased sources, it’s no wonder its conclusions might often be incorrect.

Imagine relying on a research paper that references outdated studies or dubious websites. As users, we need to be vigilant about the quality of information we consume. This insight into Grok’s source list is a call to action for everyone to critically assess the validity of the data provided by AI tools. It’s a reminder that while technology can enhance our understanding, it can also lead us astray if we don’t double-check the facts.

Grok is a Complete Failure

The bold statement that “Grok is a complete failure” is quite a claim. But what does that actually mean? It suggests that the tool may not be able to perform its intended function effectively. Whether it’s due to faulty algorithms, poor data quality, or a lack of comprehensive training, the implications are significant.

If Grok isn’t meeting expectations, it raises questions about how we perceive AI’s role in our lives. Are we placing too much trust in these systems without fully understanding their limitations? The answer may be yes. As users, we often expect technology to be infallible, but like any tool, AI has its flaws.

In a world where we seek quick answers and instant gratification, it’s easy to overlook the fact that AI still has a long way to go before it becomes a fully reliable assistant. Grok’s shortcomings might serve as a crucial reminder that while AI can provide valuable insights, it’s still essential to apply human judgment and critical thinking to the information we receive.

Understanding the Implications of AI Missteps

Let’s take a moment to think about the broader implications of relying on AI tools like Grok. When AI provides incorrect information, it can lead to misguided decisions, especially in areas like healthcare, finance, and education. For instance, if a medical professional relies on faulty data to make a diagnosis, the consequences could be dire.

Moreover, the spread of misinformation can have ripple effects across various sectors. If individuals and businesses begin to rely on these tools without question, the potential for widespread misunderstanding increases. This is where critical thinking becomes paramount.

As users, we need to foster a culture of skepticism and inquiry. Just because a piece of information comes from an AI tool doesn’t automatically make it trustworthy. It’s essential to dig deeper, check the sources, and validate the information before acting on it.

AI and Human Collaboration

While we’ve highlighted Grok’s shortcomings, it’s also crucial to recognize the potential benefits of using AI when combined with human intelligence. AI can process massive amounts of data much faster than any human can. It can identify trends, make predictions, and provide insights that would otherwise take much longer to uncover.

However, the key lies in collaboration. Humans should be at the helm of AI technologies, guiding them and ensuring they remain tools for enhancement rather than crutches for laziness. By combining human intuition and critical thinking with AI’s data-processing capabilities, we can make more informed decisions.

For instance, in a business setting, an AI tool can analyze market trends, but it’s the human team that will interpret those insights and make strategic decisions based on their understanding of the market and the specific nuances of their industry. This collaborative approach can lead to better outcomes and mitigate the risks associated with blindly trusting AI tools like Grok.

Learning from Grok’s Mistakes

Every technology has its learning curve, and Grok is no exception. Its current failures provide valuable lessons for developers and users alike. For developers, it’s crucial to continuously refine algorithms, enhance data quality, and ensure the system is learning from its mistakes.

For users, the key takeaway is to remain vigilant. Don’t take information at face value, and always seek to understand the context behind the data. Whether you’re using Grok or any other AI tool, it’s essential to approach the information with a critical mindset.

The conversation around AI is constantly evolving, and as users, we have the power to shape that trajectory. By demanding better performance and accountability from AI tools, we can help ensure they serve us effectively rather than mislead us.

The Future of AI Tools like Grok

As we look toward the future, it’s clear that AI will continue to play an increasingly significant role in our lives. Grok’s current shortcomings don’t negate the potential benefits that AI can offer. Instead, they highlight the need for continuous improvement and adaptation.

In the coming years, we can expect advancements in AI technologies that will likely address many of the issues we’ve discussed. Developers are constantly working on refining their algorithms, improving data sources, and enhancing user experiences.

However, it’s crucial for users to remain engaged and proactive. By providing feedback and holding AI developers accountable, we can help shape the development of these technologies. We can encourage the creation of systems that prioritize accuracy, transparency, and user empowerment.

Final Thoughts on Grok and AI Reliability

The conversation surrounding Grok and its reliability is just one piece of a larger puzzle regarding AI in our lives. While it may be easy to label Grok as a failure based on current performance, it’s essential to view this as an opportunity for growth and improvement.

AI technologies like Grok have the potential to revolutionize the way we access and interpret information. However, we must approach them with a healthy dose of skepticism and critical thinking. By doing so, we can ensure that these tools serve us effectively and help us navigate an increasingly complex world of data.

In the end, the journey with AI is just beginning, and we all have a role to play in shaping its future. Let’s embrace the challenges, learn from the mistakes, and work together to create a world where technology enhances our lives in meaningful ways.