‘Context Rot’: A New Name for How LLM Conversations Degrade

Understanding "Context Rot" in Large Language Model Conversations

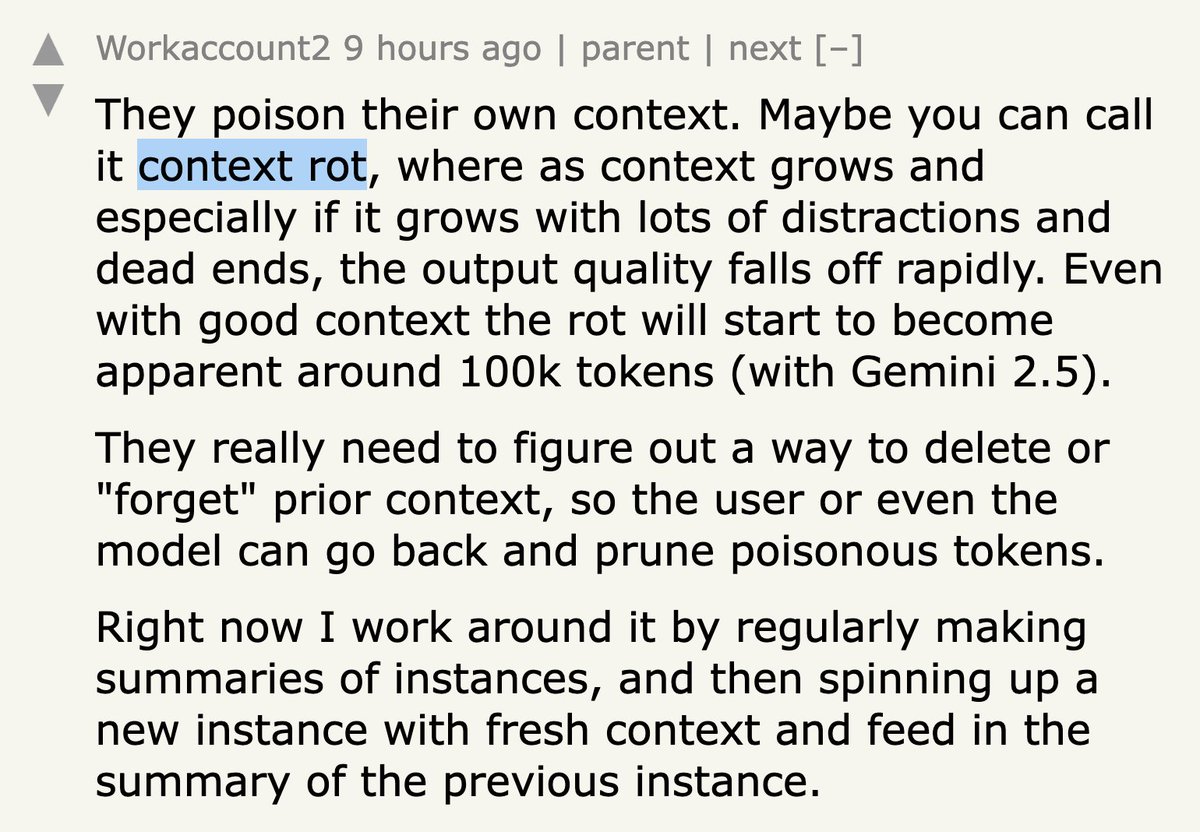

In the ever-evolving landscape of artificial intelligence, particularly in the realm of Large Language Models (LLMs), a new term has emerged: "context rot." Coined by a user named Workaccount2 on Hacker News, the term captures a phenomenon where the quality of an LLM conversation deteriorates as the context becomes cluttered with distractions and irrelevant information. This summary covers the implications of context rot, its causes, and potential solutions.

What is Context Rot?

Context rot refers to the degradation in the quality of responses from LLMs as the dialogue progresses. As interactions accumulate, the context provided to the model may begin to include irrelevant statements, distractions, or off-topic discussions. This accumulation often leads to a convoluted conversation where the model struggles to maintain coherence and relevance. Understanding context rot is crucial for developers and users of LLMs, as it can significantly affect the output quality and user experience.

The Causes of Context Rot

Several factors contribute to the phenomenon of context rot:

- Accumulation of Irrelevant Information: As conversations progress, users may introduce topics that stray from the initial subject matter, leading to a buildup of unrelated information. This can create a confusing context for the model.

- Limited Context Window: Most LLMs operate within a fixed context window, meaning they can only process a certain amount of text at a time. When this limit is reached, older information may be discarded, but the remaining context may still be cluttered with distractions.

- User Input Variability: Different users may have varying communication styles, leading to inconsistencies in the context provided to the model. This variability can further exacerbate the issues of coherence and relevance.

- Complexity of Language: Natural language is inherently complex and nuanced. As conversations evolve, the model may struggle to understand the intended meaning behind increasingly complicated interactions.
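The interaction between a fixed context window and accumulated clutter can be illustrated with a minimal sketch. It assumes a crude token count (whitespace-separated words rather than a real tokenizer) and a hypothetical helper, `trim_to_window`, that keeps only the most recent messages that fit a budget. Neither comes from any particular LLM library.

```python
def count_tokens(text: str) -> int:
    """Crude token estimate: whitespace-separated words (an assumption, not a real tokenizer)."""
    return len(text.split())


def trim_to_window(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined size fits within max_tokens."""
    kept: list[str] = []
    used = 0
    # Walk backwards from the newest message so the oldest are dropped first.
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    kept.reverse()
    return kept


history = [
    "How do I parse JSON in Python?",
    "Unrelated: what should I cook tonight?",
    "Back to JSON: how do I handle nested keys?",
]
window = trim_to_window(history, max_tokens=16)
print(window)
```

With this budget the original question is dropped while the off-topic aside survives, which is the situation described above: trimming by age alone does not remove distractions.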

The Impact of Context Rot

The presence of context rot can have several negative effects on LLM interactions:

- Decreased Coherence: As the context becomes muddled, the model’s responses may become less coherent, leading to confusion for the user.

- Increased Frustration: Users may become frustrated when they receive answers that are off-topic or irrelevant to their original question, which can diminish their overall experience with the AI.

- Reduced Trust: If users consistently encounter context rot, they may begin to lose trust in the capabilities of the LLM, potentially leading to decreased usage.

Strategies to Mitigate Context Rot

Addressing context rot is essential for enhancing the user experience and the effectiveness of LLMs. Here are several strategies that could be employed:

- Context Management Techniques: Implementing methods to manage and filter the context can help maintain relevance. This could involve summarizing previous interactions or focusing on the most pertinent information.

- Dynamic Context Windows: Developing models that can dynamically adjust their context windows based on the conversation flow might help in retaining relevant information while discarding distractions.

- User Guidance: Providing users with guidelines on how to interact with LLMs can help minimize irrelevant input. Encouraging concise and focused questions can lead to clearer contexts.

- Feedback Loops: Implementing systems that allow users to provide feedback on responses can help models learn and adapt to avoid context rot in future interactions.
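As one possible reading of the context management idea above, the sketch below keeps only earlier messages that share a keyword with the current question. The stopword list, the `keywords` and `filter_context` helpers, and the overlap threshold are all illustrative assumptions. A production system would more likely use embeddings or a summarization step.

```python
import re

# Small illustrative stopword list (an assumption, not a standard resource).
STOPWORDS = {"the", "a", "an", "is", "to", "of", "and", "in", "how", "do", "i", "what"}


def keywords(text: str) -> set[str]:
    """Lowercased alphanumeric words minus the stopword list."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def filter_context(history: list[str], question: str, min_overlap: int = 1) -> list[str]:
    """Keep earlier messages that share at least min_overlap keywords with the question."""
    wanted = keywords(question)
    return [m for m in history if len(keywords(m) & wanted) >= min_overlap]


history = [
    "How do I parse JSON in Python?",
    "What should I cook tonight?",
    "The json module has a loads function.",
]
relevant = filter_context(history, "How do I handle nested JSON keys?")
print(relevant)
```

Here the cooking question is filtered out because it shares no keywords with the new question, while both JSON-related messages are kept.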

The Future of LLM Interactions

As AI technology continues to advance, understanding and addressing context rot will be crucial for the development of more effective and user-friendly LLMs. By recognizing the factors that contribute to context rot and implementing strategies to mitigate its effects, developers can enhance the interaction quality and maintain user trust.

Conclusion

In conclusion, the concept of context rot sheds light on an important challenge faced by users and developers of Large Language Models. As conversations evolve, the risk of losing coherence due to irrelevant distractions becomes increasingly significant. By adopting strategies for better context management and user engagement, the impact of context rot can be minimized, leading to more productive and satisfying interactions with AI. As the field of AI continues to grow, addressing these challenges will be key to unlocking the full potential of LLMs and ensuring a positive user experience.

Workaccount2 on Hacker News just coined the term “context rot” to describe the thing where the quality of an LLM conversation drops as the context fills up with accumulated distractions and dead ends https://t.co/2oWaMhlZDi pic.twitter.com/8kZgHNHHG0

— Simon Willison (@simonw) June 18, 2025

Workaccount2 on Hacker News Just Coined the Term “Context Rot”

If you’re like me, you love diving into the world of AI and conversational models. But have you ever noticed that sometimes, as conversations with these models continue, the quality starts to slip? It’s as if the chat starts to get bogged down with irrelevant information and distractions. Recently, a user on Hacker News, Workaccount2, brought this phenomenon to light by coining the term “context rot.”

So, what exactly is “context rot”? Essentially, it refers to the decline in the quality of a conversation with a large language model (LLM) as the context accumulates distractions and dead ends. This idea matters as more people rely on AI for various tasks, from casual chats to serious research.

What is Context Rot?

“Context rot” happens when a conversation with an AI model becomes cluttered with unnecessary information. Imagine you’re talking to a friend about your favorite movies, but somehow, the discussion shifts to their pet’s dietary preferences. Now, you’re trying to steer the conversation back to movies, but the more you talk, the more the topic spins out of control. This is similar to what occurs in LLM interactions.

As conversations evolve, the full history of previous exchanges is typically passed back to the model as context on every turn. Over time, this can lead to a buildup of irrelevant context that muddles the AI’s responses. The result? Disjointed, confusing replies that don’t quite hit the mark.

For example, if you ask a model about climate change but previously discussed the latest trends in fashion, the AI might give you a response that feels oddly mixed, referencing fashion in a context where it doesn’t belong. This is context rot in action.
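To make that buildup concrete, here’s a minimal sketch of how a chat loop might carry history forward. It assumes the common pattern of sending a list of role/content messages on every request. The `Conversation` class and `echo_model` stand-in are hypothetical and do not call any real LLM API.

```python
from typing import Callable


class Conversation:
    """Keeps every turn and resends the full history on each request."""

    def __init__(self, model: Callable[[list[dict]], str]) -> None:
        self.model = model
        self.messages: list[dict] = []

    def ask(self, text: str) -> str:
        self.messages.append({"role": "user", "content": text})
        # The model receives the whole history, not just the latest question.
        reply = self.model(self.messages)
        self.messages.append({"role": "assistant", "content": reply})
        return reply


def echo_model(messages: list[dict]) -> str:
    """Stand-in for a real LLM call: reports how many messages it was given."""
    return f"saw {len(messages)} messages"


chat = Conversation(echo_model)
first = chat.ask("What are the latest trends in fashion?")
second = chat.ask("What causes climate change?")
print(first)
print(second)
```

By the second question, the fashion exchange is already part of what the model sees, which is how an unrelated earlier topic can leak into a later answer.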

Why is Context Rot Important?

Understanding context rot is crucial for developers, researchers, and anyone using LLMs. The quality of AI interactions can significantly impact user experience, and if users find themselves sifting through irrelevant information to get to the point, they may become frustrated.

Moreover, as AI continues to be integrated into various sectors—from customer service to education—the importance of maintaining context clarity cannot be overstated. If users can’t rely on AI to provide relevant and coherent information, they might turn away from these technologies altogether.

How Does Context Rot Occur?

There are a few reasons context rot occurs in AI conversations:

1. **Accumulation of Irrelevant Information**: As discussions progress, irrelevant topics can creep in. If not managed correctly, these distractions accumulate, leading to confusion.

2. **Memory Limitations**: Language models have limits on how much context they can take in at once. As a long history fills that limit, the conversation’s focus can become diluted.

3. **Complexity of Language**: Natural language is inherently complex and nuanced. Misinterpretations can lead to irrelevant digressions that contribute to context rot.

4. **User Input**: Sometimes, the way users frame their questions or statements can lead to misunderstandings, further exacerbating the context rot.

By identifying these factors, developers can work on solutions to minimize context rot and improve the overall experience when interacting with LLMs.

How Can We Combat Context Rot?

So, what can be done to tackle this pesky issue of context rot? Here are some strategies:

1. **Clear and Concise Prompts**: When interacting with an LLM, it’s beneficial to be as clear and concise as possible. This helps the model focus on the main topic without getting sidetracked.

2. **Context Management**: Developers can implement better context management systems within their models. This could involve prioritizing more relevant context or even allowing users to reset or edit the conversation history when necessary.

3. **User Feedback Loops**: Creating systems for users to provide feedback on AI responses can help developers understand where context rot is occurring and how to address it effectively.

4. **Training Data Improvements**: Improving the quality of the training data could also reduce context rot. If the model is trained on clearer, more focused conversations, it may be better equipped to handle user interactions without veering off track.

5. **Adaptive Learning**: Implementing adaptive learning techniques could allow models to better understand user preferences and adjust their responses accordingly, thus minimizing irrelevant context.
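The “reset or edit the conversation history” idea from the list above could look something like this. It’s only a sketch: the role/content message shape and the `remove_turns` and `reset` helpers are assumptions for illustration, not features of any specific product.

```python
def remove_turns(messages: list[dict], unwanted: set[int]) -> list[dict]:
    """Return a copy of the history without the messages at the given positions."""
    return [m for i, m in enumerate(messages) if i not in unwanted]


def reset(messages: list[dict]) -> list[dict]:
    """Start over, keeping only the system instructions."""
    return [m for m in messages if m["role"] == "system"]


history = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Tell me about climate change."},
    {"role": "user", "content": "Actually, what is trending in fashion?"},
    {"role": "assistant", "content": "Here are some fashion trends."},
    {"role": "user", "content": "Back to climate change, please."},
]

edited = remove_turns(history, {2, 3})  # cut the fashion detour
fresh = reset(history)                  # or start a clean conversation
print([m["content"] for m in edited])
print(fresh)
```

Both helpers return new lists instead of mutating the original, so the full history is still available if the user changes their mind.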

The Future of AI Conversations

As we move forward in the world of AI, understanding and addressing context rot will be essential. The demand for more intuitive, coherent, and relevant AI interactions will only grow.

By acknowledging the challenges posed by context rot, developers and researchers can create better models that facilitate smoother, more engaging conversations. This not only enhances user experience but also builds trust in AI technologies.

The notion of context rot, brought to light by Workaccount2 on Hacker News, serves as a reminder that as we innovate, we must also refine and adapt our approaches to ensure we’re meeting user needs.

Real-World Applications of Understanding Context Rot

The implications of context rot extend beyond just casual conversations. In professional settings, where precision and clarity are paramount, context rot can lead to misunderstandings or even significant errors. For instance, in customer service scenarios, if an AI fails to recall the context of a complaint accurately, it could result in unsatisfactory resolutions for customers.

Consider the realm of education, too. Students rely on AI for tutoring, research, and study aids. If a language model becomes disoriented during a session, it can lead to confusion and frustration, ultimately hindering the learning process.

By addressing context rot, educators can harness AI’s potential to create personalized learning experiences that truly cater to individual student needs.

Conclusion

As AI technology continues to evolve, the conversation around context rot will undoubtedly gain more traction. It’s an essential concept that highlights the importance of clarity and relevance in AI interactions. By understanding and addressing context rot, we can enhance the quality of conversations with language models, paving the way for a future where AI serves as an even more valuable resource in our daily lives.

Engaging with AI shouldn’t feel like digging through cluttered conversations. By recognizing the signs of context rot and taking proactive steps to combat it, we can improve the way we interact with these powerful tools. So, the next time you find yourself in a conversation with an AI and the responses start to feel a bit off, remember the term “context rot” and consider how you might help steer the conversation back on track!