X Takes NY to Court: First Amendment vs. Content Moderation!

X Sues New York Over Content Moderation Law: A First Amendment Challenge

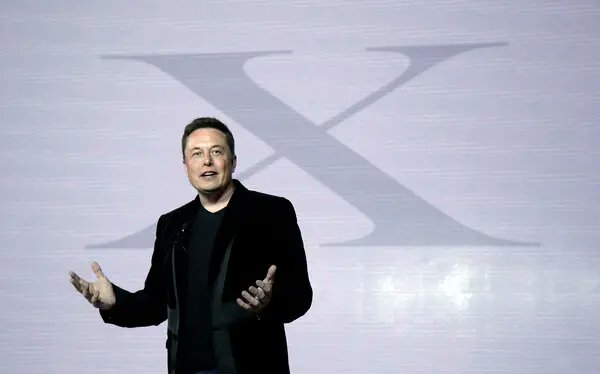

In a significant legal move, X, the social media platform formerly known as Twitter, has filed a federal lawsuit against the state of New York, arguing that a newly enacted content moderation law infringes on First Amendment rights. The lawsuit, reported in a post on X by entrepreneur Mario Nawfal, raises critical questions about the balance between regulating online platforms and protecting free speech.

Overview of the Lawsuit

The lawsuit stems from a recently passed New York law that requires social media platforms to disclose their policies and procedures for moderating content deemed hate speech or extremism. X claims that this requirement violates the First Amendment, which protects freedom of speech and expression.

The Content Moderation Law

New York’s law aims to address concerns surrounding hate speech and extremist content online. By requiring platforms to publicly outline their moderation policies, the state seeks to hold social media companies accountable for the content that proliferates on their sites. Advocates of the law argue that transparency is essential in combating misinformation and harmful rhetoric. However, critics, including X, contend that such regulations could stifle free expression and place undue burdens on social media companies.

First Amendment Implications

The core of X’s argument lies in the First Amendment, which bars the government from abridging freedom of speech. X asserts that the New York law imposes excessive government control over its content moderation practices, effectively dictating how the company should manage user-generated content. X claims that, by mandating disclosure of its moderation policies, the law infringes on its editorial discretion and operational autonomy.


The Broader Impact on Social Media Platforms

This lawsuit is part of a broader trend in which social media platforms increasingly face state and federal regulations aimed at curbing hate speech and misinformation. As concerns about online extremism grow, lawmakers across the country are proposing regulations designed to hold platforms accountable. However, these laws often clash with the principles of free speech, raising complex legal and ethical dilemmas.

X’s Position and Future Outlook

X’s legal action against New York represents a critical moment in the ongoing debate over content moderation and free speech in the digital age. The company argues that it has a responsibility to uphold free expression while also taking steps to mitigate harmful content. However, the challenge lies in finding a balance between these competing interests.

As the lawsuit unfolds, it could set significant precedents for how social media platforms navigate content moderation regulations. The outcome may influence similar laws in other states and shape the future landscape of online communication.

Conclusion

The lawsuit filed by X against New York underscores the complexities of regulating social media in a democratic society. As platforms grapple with their responsibilities to moderate content while safeguarding free speech, the legal battle will likely draw attention from across the nation. The implications of this case extend beyond New York, potentially affecting how social media companies operate and how they interact with regulatory frameworks aimed at curbing harmful online behavior.

Key Takeaways

- Lawsuit Filed: X has sued New York over a new content moderation law that requires disclosure of moderation practices.

- First Amendment Concerns: The lawsuit argues that the law violates First Amendment rights by imposing government control over content moderation.

- Impact on Social Media: The case highlights the ongoing tension between regulating online platforms and protecting free speech.

- Future Implications: The outcome could set significant legal precedents for content moderation laws in other states and influence how social media companies operate.

This legal battle is indicative of a broader struggle within the digital landscape, where the need for accountability often conflicts with the fundamental rights of expression. As society continues to navigate these challenges, the decisions made in this case could have lasting ramifications for both social media platforms and the users who rely on them for communication and information sharing.

BREAKING: X SUES NEW YORK OVER CONTENT MODERATION LAW

@X has filed a federal lawsuit against New York, claiming the state’s new law on social media content rules violates the First Amendment.

The law demands platforms disclose how they police hate speech, extremism, and… https://t.co/hiurXgP9E6

— Mario Nawfal (@MarioNawfal) June 17, 2025


In a bold move that has captured the attention of social media users and legal experts alike, X has filed a federal lawsuit against New York over its newly enacted social media content moderation law. The lawsuit is a significant development in the ongoing debate about free speech and the role of social media platforms in regulating content. The law in question requires social media platforms to disclose their methods for policing hate speech and extremism, raising questions about the balance between user safety and First Amendment rights.

The Lawsuit and Its Implications

X argues that New York’s content moderation law violates the First Amendment, which guarantees freedom of speech. This legal challenge is not just about X; it has broader implications for all social media platforms operating within the state. With the rise of misinformation and hate speech online, many states are taking steps to regulate how these platforms manage content. However, X’s lawsuit suggests that such regulations could infringe on the rights of users and of the platforms themselves.

The law requires social media companies to publicly disclose how they combat hate speech and other forms of harmful content. Critics argue that this could lead to a chilling effect on free speech, as platforms may feel pressured to censor more content than necessary to avoid scrutiny. This brings up an essential question: how do we ensure that social media remains a space for free expression while also protecting users from harmful content?

Understanding Content Moderation

Content moderation refers to the processes that social media platforms use to monitor, review, and manage user-generated content. This can involve the removal of posts that violate community guidelines, the banning of users who consistently share harmful content, and the implementation of algorithms designed to filter out hate speech and misinformation. As social media becomes an increasingly dominant form of communication, the need for effective content moderation is more critical than ever.

However, the challenge lies in finding a balance. While it’s essential to protect users from hate speech and extremism, overly aggressive moderation can suppress legitimate discourse. X’s lawsuit underscores the complexities involved. By demanding transparency in content moderation practices, New York lawmakers may unintentionally push platforms to adopt more draconian measures to avoid legal repercussions.

The First Amendment Debate

The First Amendment is a cornerstone of American democracy, safeguarding the right to free speech. This right is particularly important in the context of social media, where diverse opinions and discussions thrive. X’s lawsuit highlights the tension between state regulations and constitutional rights. Supporters of the lawsuit argue that the New York law could set a dangerous precedent, allowing states to impose their own standards of acceptable speech on platforms that operate across state lines.

On the other hand, advocates for the New York law argue that it is a necessary step to combat the growing problem of hate speech and misinformation online. They contend that social media companies have a responsibility to protect their users and that transparency in content moderation practices is crucial for accountability. This debate raises vital questions about the role of government in regulating speech on private platforms and the extent to which these platforms should be held accountable for the content shared by their users.

The Role of Social Media Companies

As private entities, social media companies like X have the right to set their own community guidelines and moderation policies. However, they also bear a responsibility to provide a safe environment for users. This delicate balance can lead to conflicts, especially when laws like New York’s come into play. X’s lawsuit reflects this struggle, suggesting that while platforms should be accountable for their moderation practices, they should not be forced to disclose sensitive information that could compromise user privacy or lead to over-censorship.

Moreover, social media companies face immense pressure from both users and lawmakers to address the issues of hate speech and extremism. The public outcry over harmful content has led many platforms to invest heavily in content moderation systems, including employing teams of moderators and developing sophisticated AI algorithms. However, these efforts are often met with criticism from both sides of the debate: some argue that platforms are not doing enough, while others claim they are overstepping their bounds.

Public Reaction and Future Implications

The reaction to X’s lawsuit has been mixed. Many users support the platform’s stance, viewing it as a defense of free speech in the digital age. Others worry that the lawsuit may hinder efforts to combat hate speech and misinformation online. The broader implications of this legal battle could influence how social media companies operate in the future and shape the conversation about content moderation in the years to come.

As the lawsuit progresses, it will likely draw attention from various stakeholders, including civil rights organizations, lawmakers, and legal experts. The outcome could set a significant precedent for how social media platforms interact with state regulations and how they manage user-generated content.

Conclusion: Navigating the Complex Landscape of Free Speech and Moderation

The lawsuit filed by X against New York over the content moderation law is a critical moment in the ongoing discussion about free speech and the responsibilities of social media platforms. As we continue to navigate this complex landscape, it’s essential to consider the implications of content moderation practices, the role of the First Amendment, and the need for transparency and accountability in how platforms operate.

It’s clear that this issue is not going away anytime soon. The legal outcomes of this case could reshape the way social media companies approach content moderation, impacting users across the country. As we watch this situation unfold, it will be fascinating to see how the balance between free speech and user safety is negotiated in the digital age.

In the end, the conversation surrounding content moderation and free speech is more important than ever. It’s a dynamic issue that requires ongoing dialogue, thoughtful consideration, and a commitment to finding solutions that respect the rights of all users while promoting a safe online environment.